Part 1: NASA Crater Detection

This is Part 1 of a two-part series on crater detection using deep learning. Read Part 2 →

Introduction 🚀

As cislunar space grows increasingly crowded, spacecraft face heightened risks during navigation. Traditionally, space missions rely on Earth-based communication to determine their location. But what happens when communication is lost?

To solve this, NASA is developing TRON (Target & Range-adaptive Optical Navigation) — a next-gen system that allows spacecraft to determine their position independently, without Earth’s help.

TRON uses two methods:

- Planetary Triangulation for long-range navigation by measuring angles between celestial bodies.

- Crater-Based Localization for close-range navigation by detecting and matching craters seen in images with pre-mapped craters on the lunar surface.

This project focuses on crater detection — a key component of crater-based localization. Accurate crater recognition helps spacecraft understand their exact position, enabling autonomous operation in deep space.

Project Objective 🌕

The goal is to build a system that automatically detects lunar craters in spacecraft images and fits an ellipse around each crater’s rim.

This is challenging due to:

- Visual complexity: Craters vary in size, lighting, and visibility.

- Hardware constraints: The model must run on a CPU only (no GPU), process each 5 MP image in under 20 s, and use less than 4 GB of RAM, on hardware comparable to a Raspberry Pi 5.

These constraints demand fast, lightweight deep learning models that can handle real-world noise and variability.

Literature Review 🧠

Early crater detection used edge detection and hand-crafted features, but struggled with shape and lighting variations.

Modern deep learning detectors fall into two families:

- Single-stage (e.g., YOLO, SSD) for speed.

- Two-stage (e.g., Faster R-CNN, Mask R-CNN) for precision.

We compare:

- YOLOv10: Fast, efficient real-time bounding box detector.

- Ellipse R-CNN: Detects and fits elliptical shapes to crater rims.

Dataset 📸

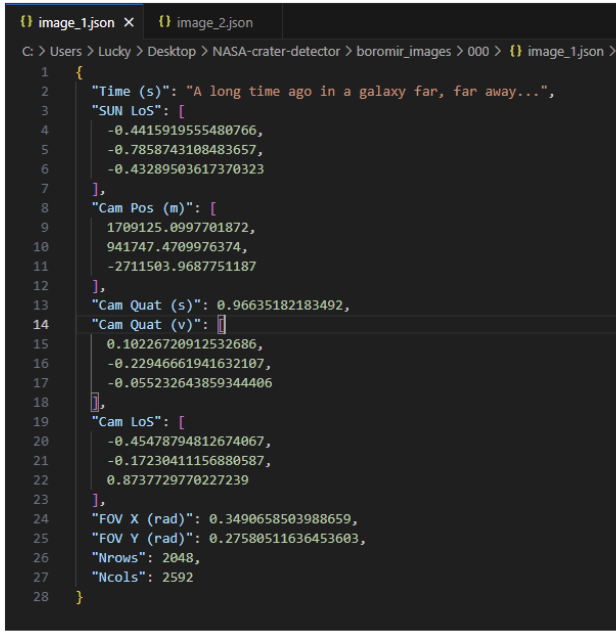

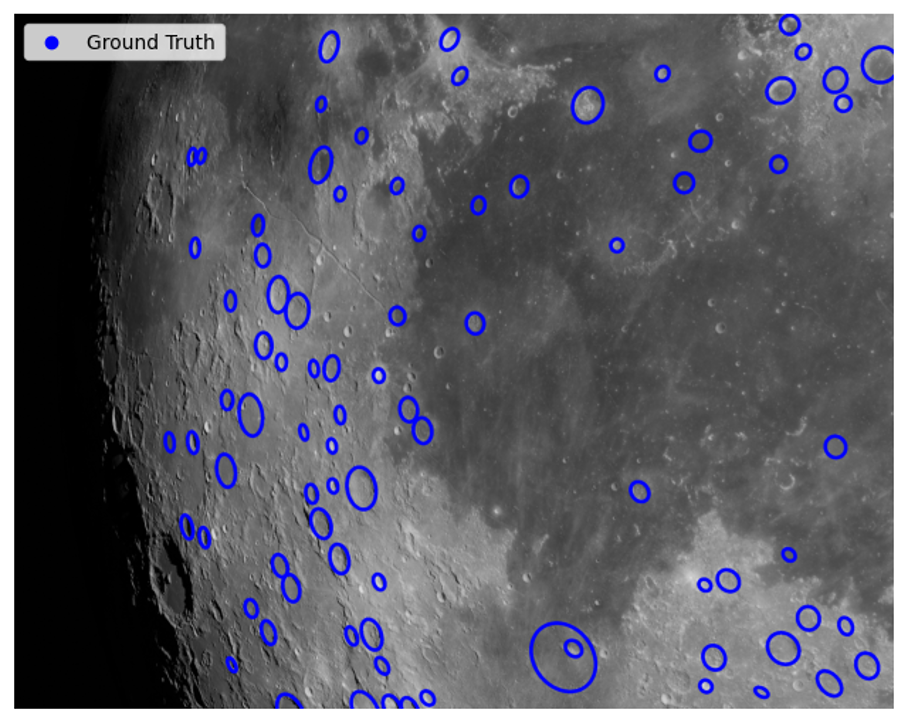

We used a synthetic lunar crater dataset developed by NASA (via the Boromir engine), based on the crater catalog by Robbins (2019).

🗂️ Composition:

- 1,000 grayscale images (2592×2048 px)

- Paired JSON metadata: camera pose, sun angle, etc.

- Dual label formats: YOLO (bounding boxes) and Ellipse R-CNN (ellipse parameters)

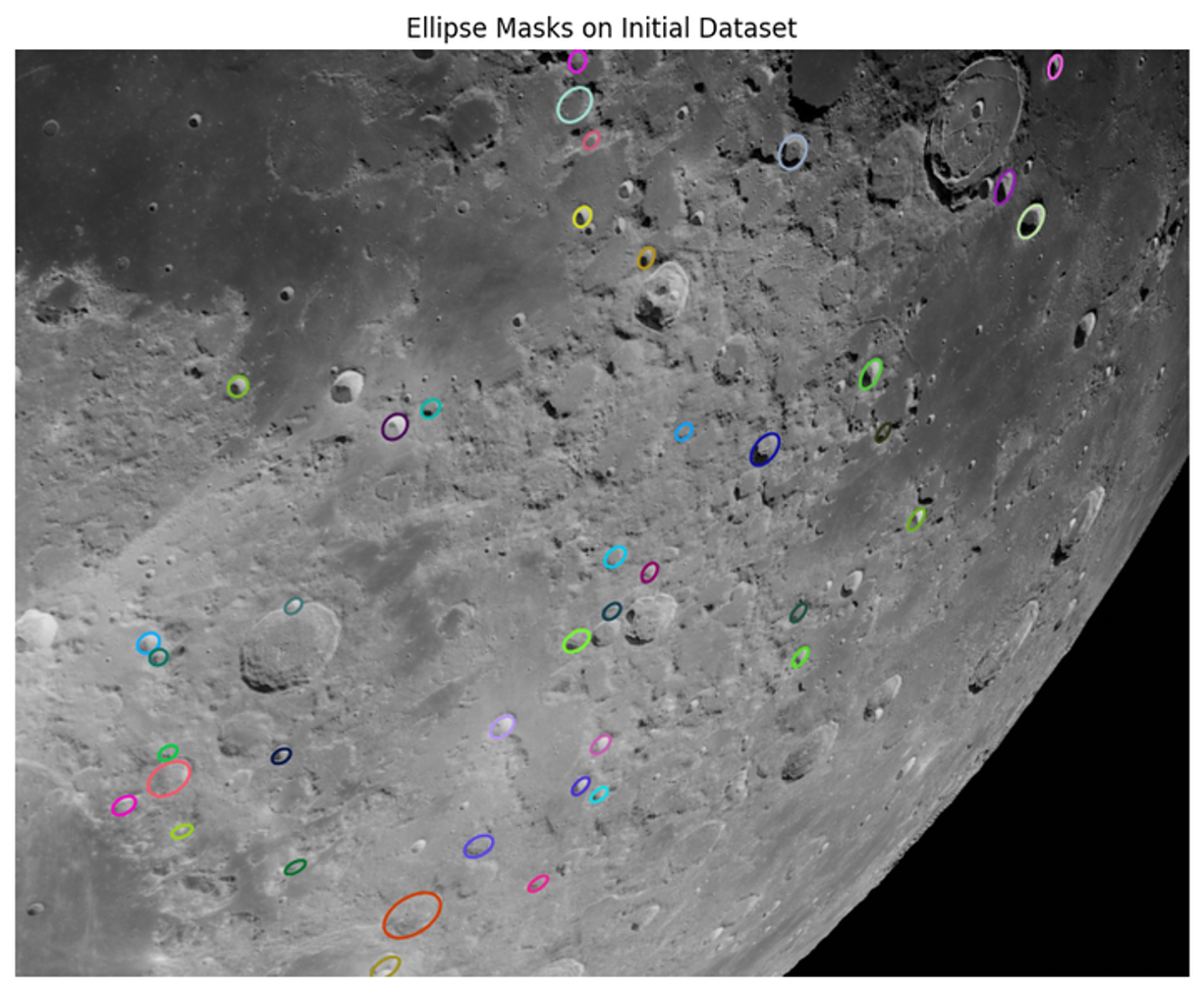

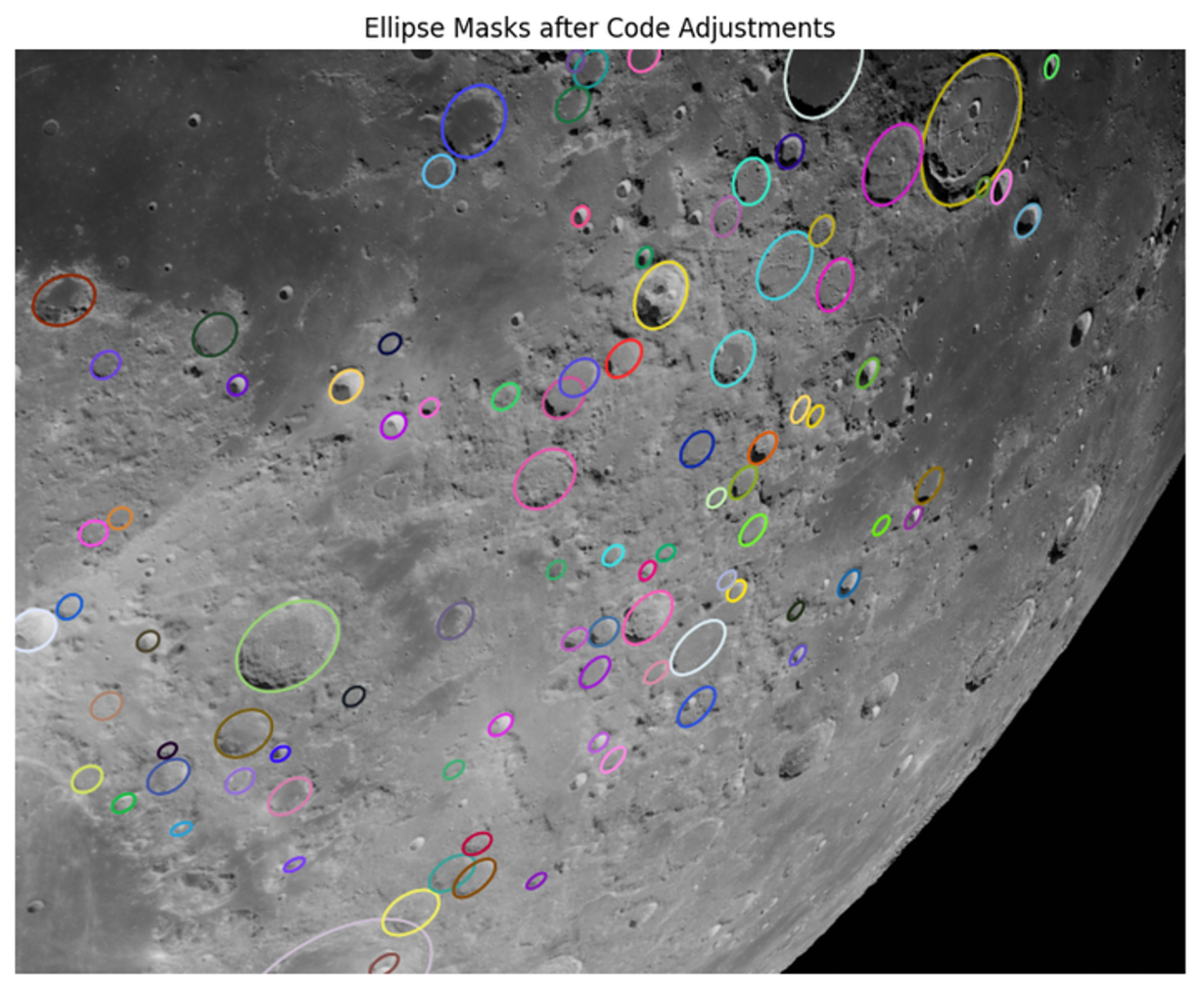

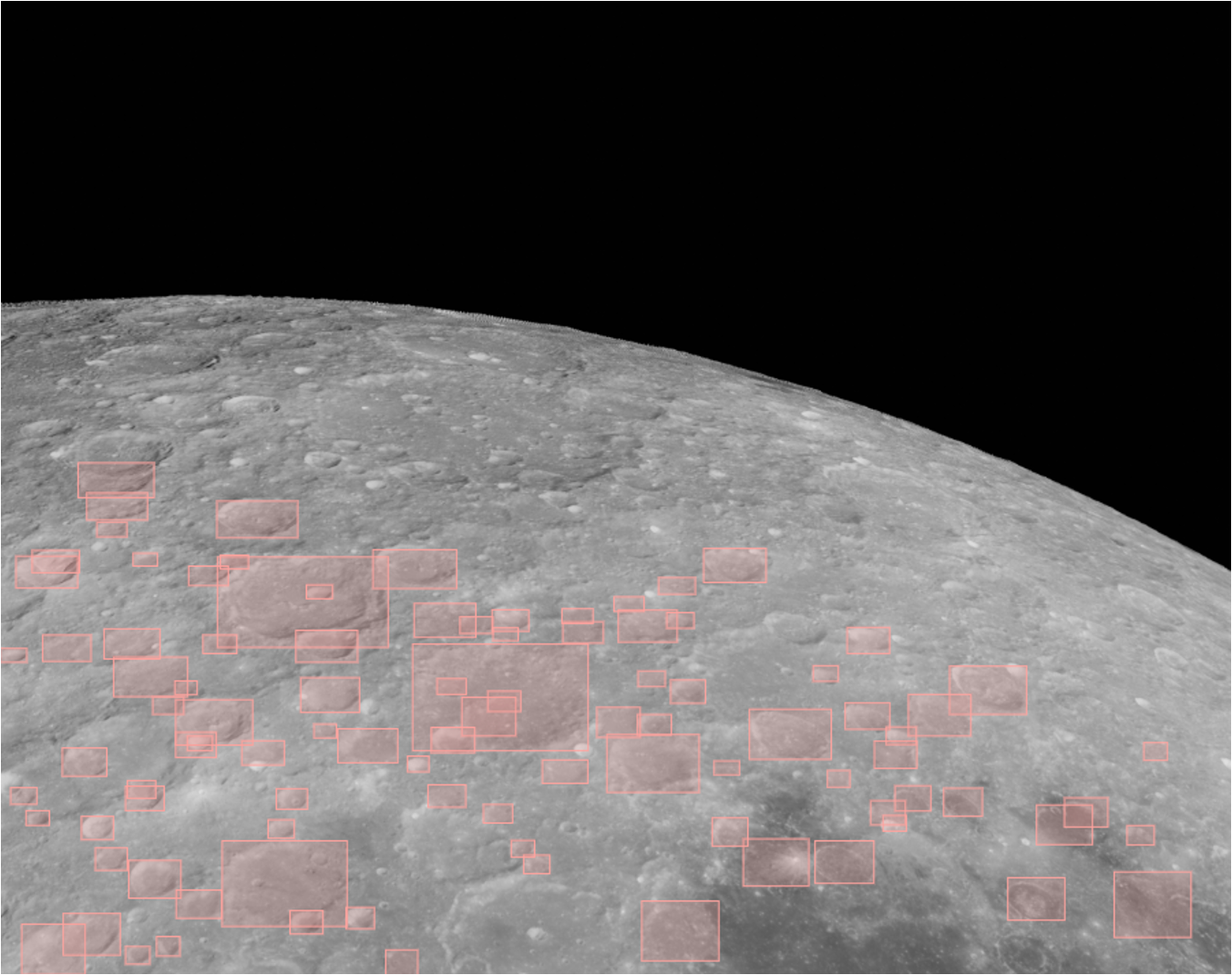

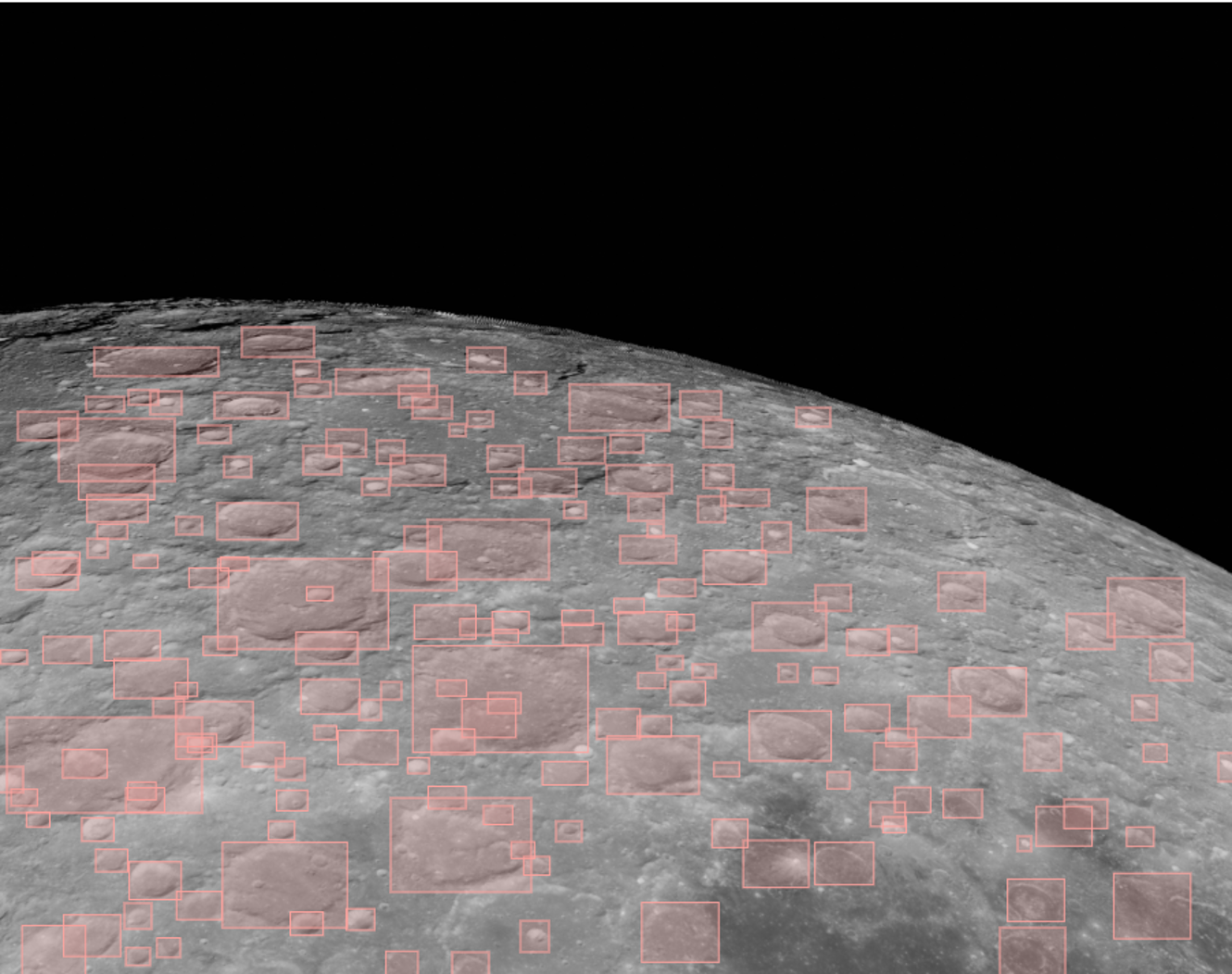

🖼️ Dataset Preview

⚠️ Challenges & Fixes

- Challenge: the auto-generated masks were misaligned

- Fix: extensive manual refinement in Label Studio

- Ellipse labels were converted to bounding boxes for YOLO
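The ellipse-to-box conversion can be sketched as below. This is a minimal illustration rather than the project's actual script: it assumes each ellipse is stored as a center, two semi-axes, and a rotation in radians, and that boxes are clipped to the image before being normalized into the YOLO `class x_center y_center width height` layout. The function name and parameter order are hypothetical.

```python
import math

def ellipse_to_yolo(cx, cy, a, b, theta, img_w, img_h, class_id=0):
    """Tightest axis-aligned box around a rotated ellipse, in YOLO format.

    Assumed inputs (not taken from the dataset spec): center (cx, cy) in
    pixels, semi-axes a and b in pixels, rotation theta in radians.
    """
    # Half-extents of a rotated ellipse along the image x and y axes.
    half_w = math.sqrt((a * math.cos(theta)) ** 2 + (b * math.sin(theta)) ** 2)
    half_h = math.sqrt((a * math.sin(theta)) ** 2 + (b * math.cos(theta)) ** 2)

    # Clip the box to the image so craters cut by the frame stay valid.
    x1, x2 = max(0.0, cx - half_w), min(float(img_w), cx + half_w)
    y1, y2 = max(0.0, cy - half_h), min(float(img_h), cy + half_h)

    # YOLO labels are normalized to [0, 1] by the image size.
    return (
        class_id,
        (x1 + x2) / 2 / img_w,
        (y1 + y2) / 2 / img_h,
        (x2 - x1) / img_w,
        (y2 - y1) / img_h,
    )
```

For a 2592×2048 image, an unrotated ellipse centered in the frame with semi-axes 100 and 50 maps to a box 200 px wide and 100 px tall; rotating it by 90° swaps the two extents.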

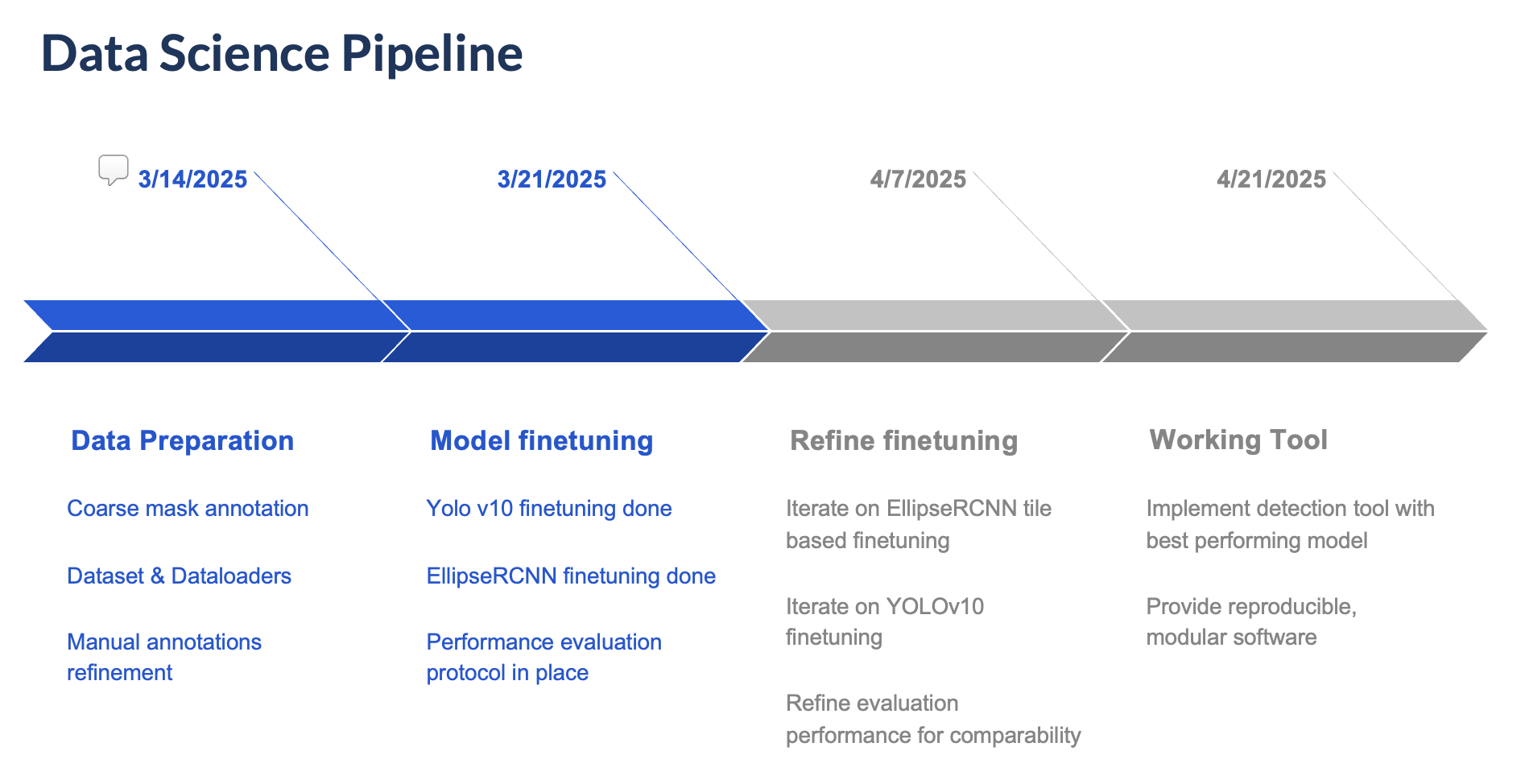

Experiments

🧪 Train/Test Split:

Both models use an 80/20 train/test split.
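One way to make an 80/20 split reproducible, so that both models can be given exactly the same images, is to sort the image IDs and shuffle them with a fixed seed. The sketch below is an assumption about how such a split could be done, not a record of how it was done here; the function name, the seed, and the ID format are hypothetical.

```python
import random

def split_dataset(image_ids, test_fraction=0.2, seed=42):
    """Deterministic train/test split over a collection of image IDs."""
    ids = sorted(image_ids)           # fixed order regardless of input order
    random.Random(seed).shuffle(ids)  # seeded shuffle, identical on every run
    n_test = round(len(ids) * test_fraction)
    return ids[n_test:], ids[:n_test]  # (train, test)
```

Calling `split_dataset` on 1,000 IDs yields 800 training and 200 test images, and the same seed always returns the same partition.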

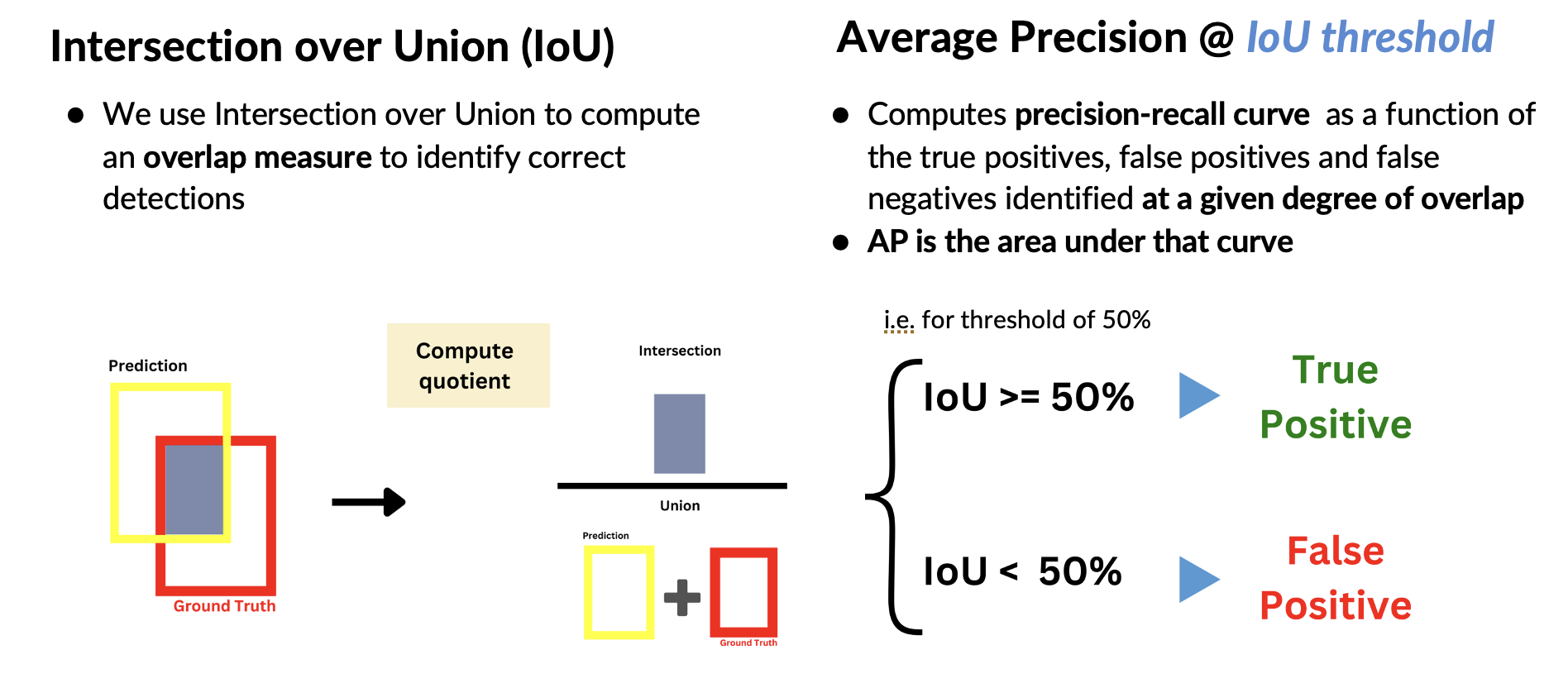

Evaluation Metrics

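We use Intersection over Union (IoU) to measure how well a predicted region overlaps its ground truth, and Average Precision (AP), computed from precision-recall (PR) curves, to measure how well detections are ranked by confidence.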
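To make the two metrics concrete, here is a simplified sketch for axis-aligned boxes on a single image and a single class. It assumes boxes are `(x1, y1, x2, y2)` tuples, matches each prediction greedily to the best unmatched ground truth, and integrates the interpolated PR curve. Library evaluators such as the ones in Ultralytics or COCO tools pool detections across images and differ in details, so treat this as an illustration of the idea only.

```python
def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def average_precision(preds, gts, iou_thr=0.5):
    """AP at one IoU threshold. preds: list of (score, box); gts: list of boxes."""
    if not gts:
        return 0.0
    matched, tp_flags = set(), []
    # Visit predictions from most to least confident.
    for _, box in sorted(preds, key=lambda p: p[0], reverse=True):
        best_iou, best_j = 0.0, -1
        for j, gt in enumerate(gts):
            if j not in matched:
                iou = box_iou(box, gt)
                if iou > best_iou:
                    best_iou, best_j = iou, j
        hit = best_iou >= iou_thr
        if hit:
            matched.add(best_j)  # each ground truth can be matched once
        tp_flags.append(hit)

    # Running precision and recall after each prediction.
    precisions, recalls, tp = [], [], 0
    for k, hit in enumerate(tp_flags, start=1):
        tp += hit
        precisions.append(tp / k)
        recalls.append(tp / len(gts))

    # Interpolate: precision at a recall level is the best precision to its right.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])

    # Area under the interpolated PR curve.
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap
```

Averaging this quantity over IoU thresholds from 0.5 to 0.95 gives the COCO-style figure, while a single threshold of 0.5 gives the mAP@0.5 style of number.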

YOLOv10 📦

- Model: YOLOv10m (mid-sized, balancing speed and accuracy)

- Trained for 100 epochs using Ultralytics

- Images resized to 640×640
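A training run with these settings might look roughly like the sketch below, which uses the Ultralytics Python API. The epoch count and image size come from the setup above; the dataset file `craters.yaml` and the helper name `train_yolov10m` are hypothetical placeholders, and any other hyperparameters are left at the library defaults because the post does not state them.

```python
# Settings stated in the post; "craters.yaml" is a hypothetical dataset config.
TRAIN_ARGS = {"data": "craters.yaml", "epochs": 100, "imgsz": 640}

def train_yolov10m(weights="yolov10m.pt", **overrides):
    """Fine-tune YOLOv10m with Ultralytics; keyword overrides replace TRAIN_ARGS."""
    # Imported lazily so this file can be read without Ultralytics installed.
    from ultralytics import YOLO

    model = YOLO(weights)  # loads pretrained YOLOv10m weights
    return model.train(**{**TRAIN_ARGS, **overrides})
```

Calling `train_yolov10m()` starts training with the settings above, and an override such as `train_yolov10m(epochs=300)` changes a single value without editing the defaults.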

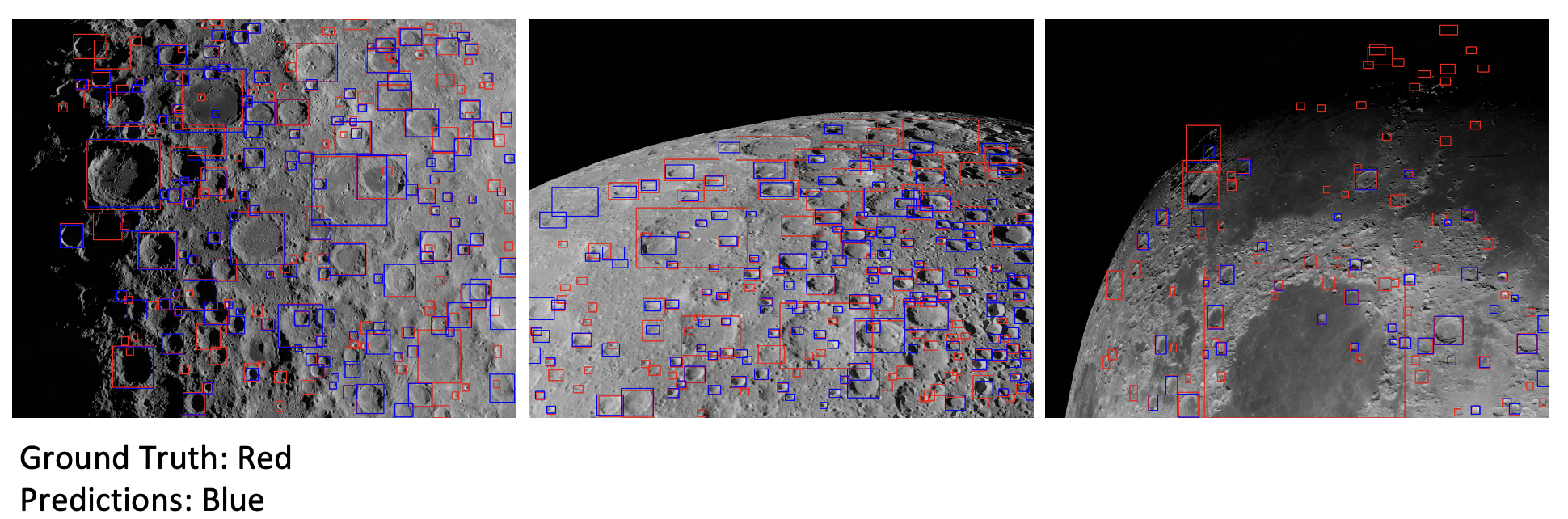

Results:

- Precision: 65%

- Recall: 51%

- mAP@0.5: 57%

- COCO-style mAP (0.5–0.95): 34%

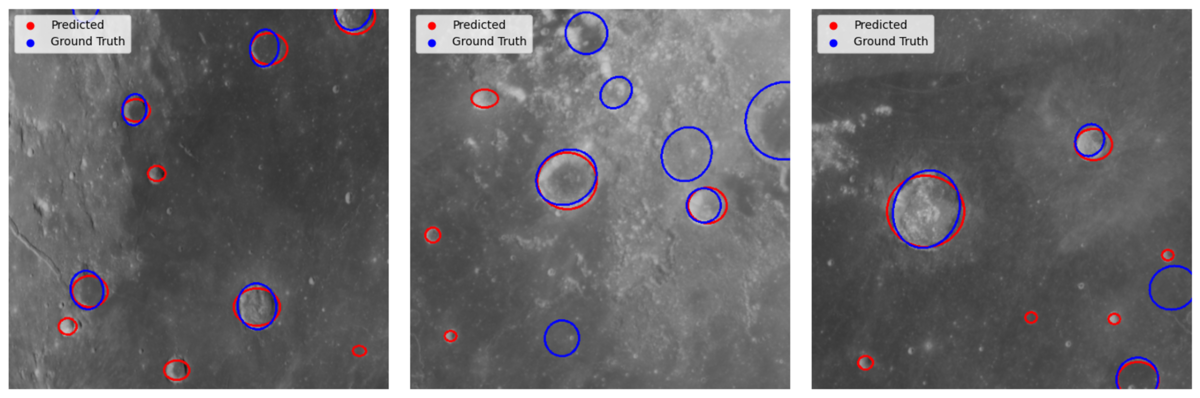

Ellipse R-CNN 🌀

- Backbone frozen; only the proposal, regression, and occlusion modules were trained

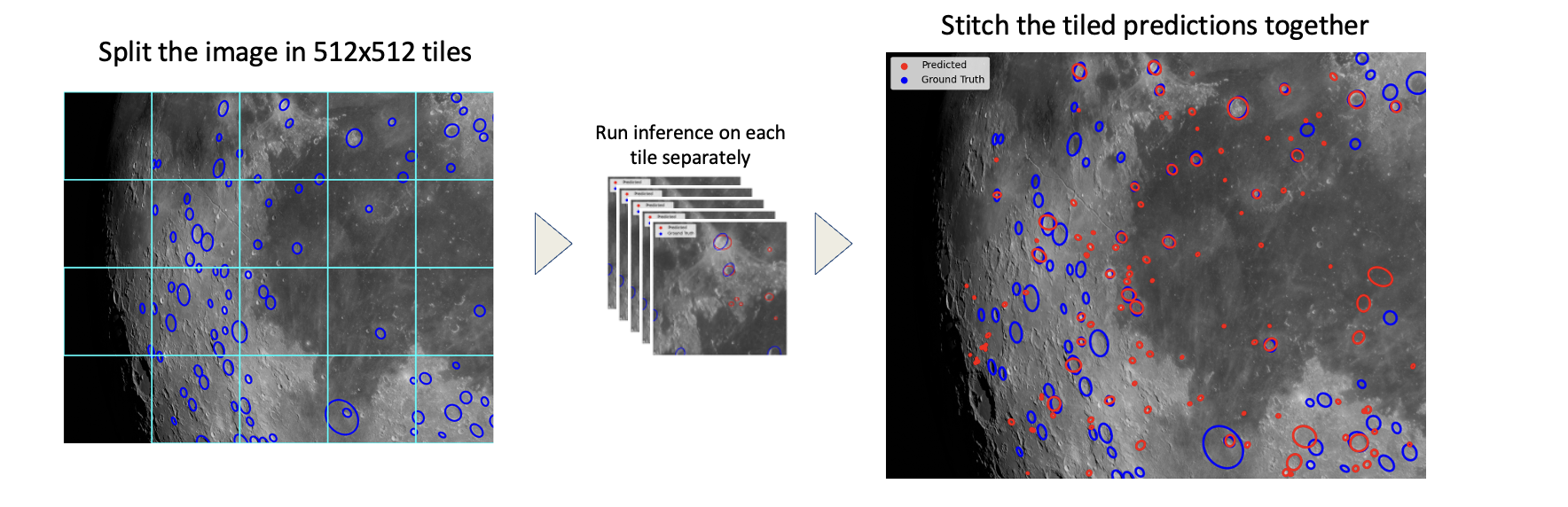

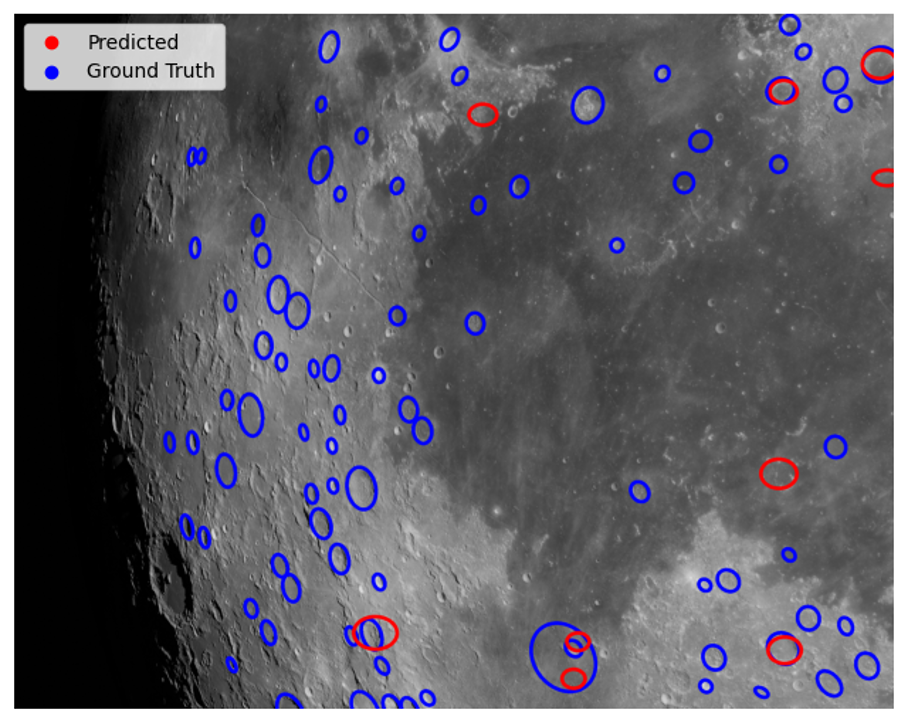

- Tiling (512×512) significantly improved detection

- Trained for 40 epochs, batch size 32
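Tiling a large frame into 512×512 patches can be sketched as follows. The overlap between neighboring tiles is an assumption added for illustration (the post does not say whether tiles overlapped), and the function names are hypothetical. The last tile in each row and column is shifted inward so that every tile stays inside the image.

```python
def tile_origins(length, tile=512, overlap=64):
    """Start offsets of tiles along one image dimension."""
    if length <= tile:
        return [0]
    stride = tile - overlap
    starts = list(range(0, length - tile + 1, stride))
    # Add a final tile flush with the border if the stride left a gap.
    if starts[-1] + tile < length:
        starts.append(length - tile)
    return starts

def tile_boxes(img_w, img_h, tile=512, overlap=64):
    """Tile rectangles (x1, y1, x2, y2) covering the whole image, row by row."""
    return [
        (x, y, min(x + tile, img_w), min(y + tile, img_h))
        for y in tile_origins(img_h, tile, overlap)
        for x in tile_origins(img_w, tile, overlap)
    ]
```

For the 2592×2048 images used here, this produces 24 tiles without overlap and 30 tiles with a 64 px overlap. Detections from each tile would then be shifted by the tile's `(x1, y1)` origin to recover full-image coordinates.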

Results (on 100 held-out images):

- mAP@0.5: 17.2%

- mAP@0.7: 9.9%

Next Steps 🧭

🔄 Evaluation Alignment

Define consistent dataset splits across YOLO and Ellipse R-CNN to ensure a fair model comparison.

🔧 Ellipse R-CNN

- Apply tiling during training

- Explore optimizers and learning-rate schedules

- Address inference issues at patch edges

⚙️ YOLOv10

- Use the full 1,000-image dataset

- Add tiling + augmentation

- Train for 300 epochs

- Try larger YOLOv10 variants

- Apply the same evaluation metrics as Ellipse R-CNN